
ShadowRay is an exposure of the Ray artificial intelligence (AI) framework infrastructure. This exposure is under active attack, yet Ray’s developers dispute that the exposure is a vulnerability and don’t intend to fix it. The dispute between Ray’s developers and security researchers highlights hidden assumptions and offers lessons for AI security, internet-exposed assets, and vulnerability scanning.

ShadowRay Explained

The AI compute platform Anyscale developed the open-source Ray AI framework, which is used mainly to manage AI workloads. The tool boasts a customer list that includes DoorDash, LinkedIn, Netflix, OpenAI, Uber, and many others.

The security researchers at Oligo Security discovered CVE-2023-48022, dubbed ShadowRay, which notes that Ray fails to apply authorization in the Jobs API. This exposure allows any unauthenticated user with network access to the dashboard to launch jobs and even execute arbitrary code on the host.

The researchers calculate that this vulnerability should earn a 9.8 (out of 10) using the Common Vulnerability Scoring System (CVSS), yet Anyscale denies that the exposure is a vulnerability. Instead, they maintain that Ray is only intended to be used within a controlled environment and that the lack of authorization is an intended feature.

ShadowRay Damages

Unfortunately, a large number of customers don’t seem to understand Anyscale’s assumption that these environments won’t be exposed to the internet. Oligo has already detected hundreds of exposed servers that attackers have compromised and categorized the compromise types as:

- Accessed SSH keys: Enable attackers to connect to other virtual machines in the hosting environment, to gain persistence, and to repurpose computing capacity.

- Excessive access: Provides attackers with access to cloud environments via Ray root access or Kubernetes clusters because of embedded API administrator permissions.

- Compromised AI workloads: Affect the integrity of AI model results, allow for model theft, and potentially infect model training to alter future results.

- Hijacked compute: Repurposes expensive AI compute power for attackers’ needs, primarily cryptojacking, which mines for cryptocurrencies on stolen resources.

- Stolen credentials: Expose other resources to compromise through exposed passwords for OpenAI, Slack, Stripe, internal databases, AI databases, and more.

- Seized tokens: Allow attackers access to steal funds (Stripe), conduct AI supply chain attacks (OpenAI, Hugging Face, etc.), or intercept internal communication (Slack).

If you haven’t verified that internal Ray resources reside safely behind rigorous network security controls, run the Anyscale tools to locate exposed resources now.
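As a rough complement to vendor tooling, the sketch below sends a single unauthenticated request to a Ray dashboard and classifies the answer. It is not the Anyscale tool and is only a minimal illustration. It assumes the dashboard's commonly documented defaults (port 8265 and a Jobs API under `/api/jobs/`); the function names `classify_response` and `probe` are hypothetical. Run it only against hosts you own, ideally from a network position where the dashboard should not be reachable.

```python
import urllib.error
import urllib.request

# Assumption: Ray's dashboard listens on port 8265 and serves the Jobs API
# under /api/jobs/ (commonly documented defaults); adjust if yours differ.
DEFAULT_PORT = 8265
JOBS_PATH = "/api/jobs/"


def classify_response(status):
    """Map an HTTP status (or None when unreachable) to an exposure verdict."""
    if status is None:
        return "unreachable"
    if status == 200:
        return "exposed"  # the API answered without any credentials
    if status in (401, 403):
        return "access-controlled"
    return "inconclusive"


def probe(host, port=DEFAULT_PORT, timeout=5.0):
    """Send one unauthenticated GET to the Jobs API and classify the answer."""
    url = f"http://{host}:{port}{JOBS_PATH}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 401 or 403.
        status = err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timed out, or blocked by network controls.
        status = None
    return classify_response(status)
```

A result of "exposed" from outside the trusted network suggests the dashboard needs network controls in front of it; "unreachable" from that same position is the outcome to aim for.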

ShadowRay Indirect Lessons

Although the direct damages will be significant for victims, ShadowRay exposes hidden network security assumptions overlooked in the mad dash to the cloud and to AI adoption. Let’s examine these assumptions in the context of AI security, internet-exposed resources, and vulnerability scanning.

AI Security Lessons

In the rush to harness AI’s perceived power, companies put initiatives into the hands of AI experts who will naturally focus on their primary objective: to obtain AI model results. This myopia is natural, but companies ignore three key hidden issues exposed by ShadowRay: AI experts lack security expertise, AI data needs encryption, and AI models need source tracking.

AI Experts Lack Security Expertise

Anyscale assumes the environment is secure just as AI researchers assume Ray is secure. Neil Carpenter, Field CTO at Orca Security, notes, “If the endpoint isn’t going to be authenticated, it could at least have network controls to block access outside the immediate subnet by default. It’s disappointing that the authors are disputing this CVE as being by-design rather than working to address it.”

Anyscale’s response compared with Ray’s actual use highlights that AI experts don’t possess a security mindset. The hundreds of exposed servers indicate that many organizations need to add security to their AI teams or include security oversight in their operations. Those that continue to assume their systems are secure will suffer data compliance breaches and other damages.
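The kind of network control Carpenter describes, limiting access to the immediate subnet, can be sketched in a few lines. The example below is illustrative only and does not reflect how Ray or any firewall implements such checks; the helper names `is_allowed` and `is_risky_bind` and the example CIDR range are assumptions.

```python
import ipaddress


def is_allowed(client_ip, allowed_cidrs):
    """Return True if client_ip falls inside any of the allowed subnets."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


def is_risky_bind(bind_address):
    """Return True if a service bound here listens on all interfaces
    (0.0.0.0 or ::) or directly on a publicly routable address."""
    addr = ipaddress.ip_address(bind_address)
    return addr.is_unspecified or addr.is_global


# Hypothetical subnet that hosts the AI cluster.
TRUSTED = ["10.0.1.0/24"]

print(is_allowed("10.0.1.25", TRUSTED))    # inside the trusted subnet
print(is_allowed("203.0.113.7", TRUSTED))  # outside the trusted subnet
print(is_risky_bind("0.0.0.0"))            # listens on every interface
```

In practice this logic belongs in a firewall, security group, or network policy rather than in application code, but the default-deny principle is the same.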

AI Data Needs Encryption

Attackers easily detect and locate unencrypted sensitive information, especially the data Oligo researchers describe as the “models or datasets [that] are the unique, private intellectual property that differentiates a company from its competitors.”

The AI data becomes a single point of failure for data breaches and the exposure of company secrets, yet organizations budgeting millions for AI research neglect spending on the related security required to protect it. Fortunately, application layer encryption (ALE) and other types of current encryption solutions can be purchased to add protection to internal or external AI data modeling.

AI Models Need Source Tracking

As AI models digest information for modeling, AI programmers assume all data is good data and that ‘garbage-in-garbage-out’ will never apply to AI. Unfortunately, if attackers can insert false external data into a training data set, the model will be influenced, if not outright skewed. Yet AI data protection remains challenging.

“This is a rapidly evolving field,” admits Carpenter. “However … existing controls will help to protect against future attacks on AI training material; for example, the first lines of defense would include limiting access, both by identity and at the network layer, and auditing access to the data used to train the AI models. Securing a supply chain that includes AI training starts in the same way as securing any other software supply chain: a strong foundation.”

Traditional defenses help with internal AI data sources but become exponentially more complicated when incorporating data sources outside of the organization. Carpenter suggests that third-party data requires additional consideration to avoid issues such as “malicious poisoning of the data, copyright infringement, and implicit bias.” Data scrubbing to avoid these issues will need to be done before adding the data to servers for AI model training.

Perhaps some researchers accept all results as reasonable, even AI hallucinations. Yet fictionalized or corrupted results will mislead anyone attempting to apply the results in the real world. A healthy dose of cynicism needs to be applied to the process to motivate tracking the authenticity, validity, and appropriate use of AI-influencing data.
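One simple way to start tracking the authenticity of training data is to record a cryptographic hash of every file and compare snapshots over time. The sketch below is a minimal illustration of that idea, not a complete provenance system; the function names and the directory layout are assumptions.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir):
    """Map each file under data_dir (by relative path) to its SHA-256 hash."""
    root = Path(data_dir)
    return {
        str(path.relative_to(root)): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def changed_files(old, new):
    """Return paths that were added, removed, or modified between manifests."""
    return sorted(key for key in old.keys() | new.keys() if old.get(key) != new.get(key))
```

Storing the manifest alongside each training run makes it possible to tell afterwards which data a model actually saw and whether any file changed unexpectedly between runs. A hash only proves that a file changed; it does not say whether the original content was trustworthy.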

Internet-Exposed Resources Lessons

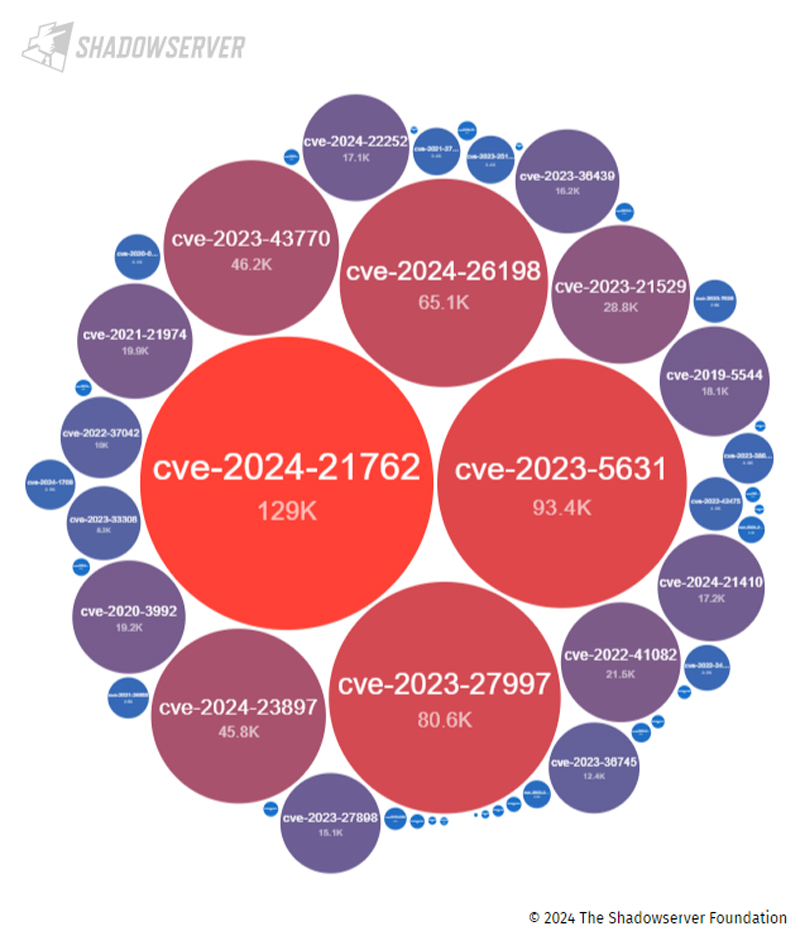

ShadowRay poses a problem because the AI teams exposed the infrastructure to public access. However, many others leave resources accessible on the internet with significant security vulnerabilities open to exploitation. For example, the image above depicts the hundreds of thousands of IP addresses with critical-level vulnerabilities that the Shadowserver Foundation detected as accessible from the internet.

A search for all levels of vulnerabilities exposes millions of potential issues yet doesn’t even include a disputed CVE such as ShadowRay or other accidentally misconfigured and accessible infrastructure. Engaging a cloud-native application protection platform (CNAPP) or even a cloud resource vulnerability scanner can help detect exposed vulnerabilities.

Unfortunately, scans require that AI development teams and others deploying resources submit their resources to security for tracking or scanning. AI teams likely launch resources independently for budgeting and rapid deployment purposes, but security teams must still be informed of their existence to apply cloud security best practices to the infrastructure.

Vulnerability Scanning Lessons

Anyscale’s dispute of CVE-2023-48022 puts the vulnerability into a gray zone along with the many other disputed CVE vulnerabilities. These range from issues that have yet to be proved and may not be valid to those where the product works as intended, just in an insecure fashion (such as ShadowRay).

These disputed vulnerabilities merit tracking either through a vulnerability management tool or a risk management program. They also merit special attention because of two key lessons exposed by ShadowRay. First, vulnerability scanning tools vary in how they handle disputed vulnerabilities, and second, these vulnerabilities need active tracking and verification.

Watch for Variance in Disputed Vulnerability Handling

Different vulnerability scanners and threat feeds will handle disputed vulnerabilities differently. Some will omit disputed vulnerabilities, others might include them as optional scans, and others might include them as different types of issues.

For example, Carpenter reveals that “Orca took the approach of addressing this as a posture risk instead of a CVE-style vulnerability … This is a more consumable approach for organizations as a CVE would typically be addressed with an update (which won’t be available here) but a posture risk is addressed by a configuration change (which is the right approach for this problem).” IT teams need to actively track how a specific tool will handle a specific vulnerability.

Actively Track & Verify Vulnerabilities

Tools promise to make processes easier, but unfortunately, the easy button for security simply doesn’t exist yet. With regard to vulnerability scanners, it won’t be obvious whether an existing tool scans for a specific vulnerability.

Security teams must actively follow which vulnerabilities may affect the IT environment and verify that the tool checks for specific CVEs of concern. For disputed vulnerabilities, additional steps may be needed, such as filing a request with the vulnerability scanner support team to verify how the tool will or won’t handle that specific vulnerability.
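The verification step can be as simple as comparing a watch list of CVEs against what each scanner reports it covers. The sketch below assumes that coverage lists have already been exported from each tool by some means; the scanner names are hypothetical placeholders, and the second CVE is included only as a familiar example.

```python
def coverage_gaps(cves_of_concern, scanner_coverage):
    """For each CVE of concern, list the scanners that do not report checking it."""
    gaps = {}
    for cve in sorted(cves_of_concern):
        missing = sorted(
            name for name, covered in scanner_coverage.items() if cve not in covered
        )
        if missing:
            gaps[cve] = missing
    return gaps


# Hypothetical watch list and scanner coverage exports.
watch_list = {"CVE-2023-48022", "CVE-2021-44228"}
coverage = {
    "scanner_a": {"CVE-2021-44228"},                    # omits the disputed CVE
    "scanner_b": {"CVE-2021-44228", "CVE-2023-48022"},
}

for cve, scanners in coverage_gaps(watch_list, coverage).items():
    print(f"{cve} is not checked by: {', '.join(scanners)}")
```

Any CVE that appears in the output is a candidate for a support request to the scanner vendor or for coverage by a second tool.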

To further reduce the risk of exposure, use multiple vulnerability scanning tools and penetration tests to validate the potential risk of discovered vulnerabilities or to discover additional potential issues. In the case of ShadowRay, Anyscale provided one tool, but free open-source vulnerability scanning tools can also provide useful additional resources.

Bottom Line: Check & Recheck for Critical Vulnerabilities

You don’t have to be vulnerable to ShadowRay to appreciate the indirect lessons that the issue teaches about AI risks, internet-exposed assets, and vulnerability scanning. Actual consequences are painful, but continuous scanning for potential vulnerabilities on critical infrastructure can locate issues to resolve before an attacker can inflict damage.

Be aware of the limitations of vulnerability scanning tools, AI modeling, and employees rushing to deploy cloud resources. Create mechanisms for teams to collaborate for better security and implement a system to continuously monitor for potential vulnerabilities through research, threat feeds, vulnerability scanners, and penetration testing.

For more help in learning about potential threats, consider reading about threat intelligence feeds.
