
Function Calling isn't something new. In June 2023, OpenAI introduced Function Calling for their GPT models, a feature now being adopted by competitors. Google's Gemini API recently added support for it, and Anthropic is integrating it into Claude. Function Calling is becoming essential for large language models (LLMs), enhancing their capabilities. All the more useful to learn this technique!

With this in mind, I aim to write a comprehensive tutorial covering Function Calling beyond basic introductions (there are already plenty of tutorials for it). The focus will be on practical implementation: building a fully autonomous AI agent and integrating it with Streamlit for a ChatGPT-like interface. Although OpenAI is used for demonstration, this tutorial can be easily adapted for other LLMs supporting Function Calling, such as Gemini.

Function Calling allows developers to describe functions (aka tools; you can think of these as actions for the model to take, like performing a calculation or placing an order), and have the model intelligently choose to output a JSON object containing arguments to call those functions. In simpler terms, it allows for:

- Autonomous decision making: Models can intelligently choose tools to respond to questions.

- Reliable parsing: Responses are in JSON format, instead of the more typical dialogue-like response. It might not seem like much at first glance, but this is what allows an LLM to connect to external systems, say via APIs with structured inputs.
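To make the "reliable parsing" point concrete, here is a minimal sketch of what an application might receive and how it could decode it. The payload is a hand-written illustration shaped like an OpenAI-style tool call, not real model output, and both `set_ac_temperature` and `parse_tool_call` are hypothetical names.

```python
import json

# Hand-written example payload (an assumption for illustration, not real model output).
raw_reply = '[{"id": "call_1", "type": "function", "function": {"name": "set_ac_temperature", "arguments": "{\\"celsius\\": 24}"}}]'


def parse_tool_call(raw: str) -> tuple[str, dict]:
    """Return the (function name, arguments) pair of the first tool call in the reply."""
    call = json.loads(raw)[0]
    # The arguments arrive as a JSON string inside the JSON payload, so decode twice.
    return call["function"]["name"], json.loads(call["function"]["arguments"])


name, args = parse_tool_call(raw_reply)
print(name, args)
```

Because the output is structured, the application can route `name` and `args` to ordinary code without trying to interpret free-form text.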

It opens up numerous possibilities:

- Autonomous AI assistants: Bots can interact with internal systems for tasks like customer orders and returns, beyond providing answers to enquiries.

- Personal research assistants: Say you are planning a trip; assistants can search the web, crawl content, compare options, and summarize results in Excel.

- IoT voice commands: Models can control devices or suggest actions based on detected intents, such as adjusting the AC temperature.

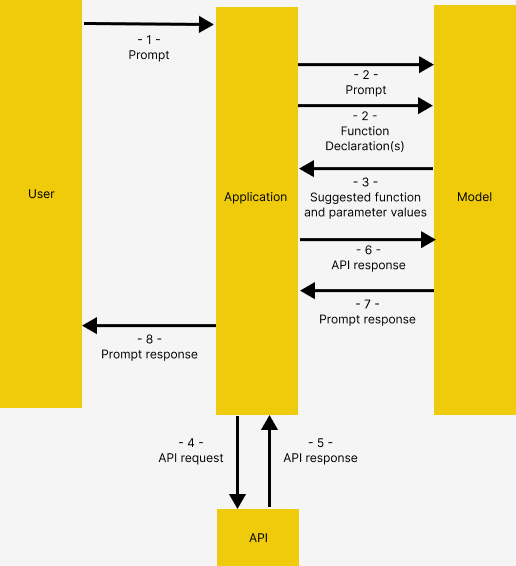

Borrowing from Gemini's Function Calling documentation, Function Calling has the structure below, which works the same way in OpenAI:

1. The user issues a prompt to the application.

2. The application passes the user-provided prompt and the Function Declaration(s), which describe the tool(s) that the model could use.

3. Based on the Function Declaration, the model suggests the tool to use and the relevant request parameters. Notice that the model outputs the suggested tool and parameters only, WITHOUT actually calling the functions.

4. & 5. Based on the response, the application invokes the relevant API.

6. & 7. The response from the API is fed into the model again to output a human-readable response.

8. The application returns the final response to the user, then the cycle repeats from step 1.
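The cycle above can be sketched end to end without any LLM. In this minimal sketch, `fake_model` is a hard-coded stand-in for the model and `get_opening_hours` is a hypothetical tool; both are assumptions made purely to show which side does what.

```python
import json


def get_opening_hours(place: str) -> dict:
    """Hypothetical tool: look up opening hours in a tiny in-memory table."""
    hours = {"coffee shop": "9am-5pm", "gym": "6am-10pm"}
    return {"place": place, "hours": hours.get(place, "unknown")}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for the LLM: suggests a tool first, then words the tool result."""
    last = messages[-1]
    if last["role"] == "user":
        # Step 3: suggest a tool and its arguments, without calling anything.
        return {"tool": "get_opening_hours", "arguments": json.dumps({"place": "coffee shop"})}
    # Step 7: turn the tool result into a human-readable answer.
    result = json.loads(last["content"])
    return {"text": f"The {result['place']} is open {result['hours']}."}


def run_cycle(prompt: str) -> str:
    tools = {"get_opening_hours": get_opening_hours}
    messages = [{"role": "user", "content": prompt}]  # steps 1 and 2
    suggestion = fake_model(messages)  # step 3
    # Steps 4 and 5: the application, not the model, invokes the tool.
    tool_result = tools[suggestion["tool"]](**json.loads(suggestion["arguments"]))
    messages.append({"role": "function", "content": json.dumps(tool_result)})  # step 6
    return fake_model(messages)["text"]  # steps 7 and 8


answer = run_cycle("When does the coffee shop open?")
print(answer)
```

The point to notice is that the model never executes anything: it only returns a tool name and arguments, and the application performs the call.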

This might seem convoluted, but the concept will be illustrated in detail with an example.

Before diving into the code, a few words about the demo application's architecture.

Solution

Here we build an assistant for tourists visiting a hotel. The assistant has access to the following tools, which allow it to access external applications.

- get_items, purchase_item: Connect to the product catalog stored in a database via API, for retrieving the item list and making a purchase respectively

- rag_pipeline_func: Connect to a document store with Retrieval Augmented Generation (RAG) to obtain information from unstructured texts, e.g. the hotel's brochures

Tech stack

Now let's begin!

Preparation

Head over to GitHub to clone my code. The contents below can be found in the function_calling_demo Notebook.

Please also create and activate a virtual environment, then run pip install -r requirements.txt to install the required packages.

Initialization

We first connect to OpenRouter. Alternatively, using the original OpenAIChatGenerator without overwriting the api_base_url would also work, provided you have an OpenAI API key.

import os
from dotenv import load_dotenv
from haystack.components.generators.chat import OpenAIChatGenerator
from haystack.utils import Secret
from haystack.dataclasses import ChatMessage
from haystack.components.generators.utils import print_streaming_chunk

# Set your API key as an environment variable before executing this
load_dotenv()
OPENROUTER_API_KEY = os.environ.get('OPENROUTER_API_KEY')

chat_generator = OpenAIChatGenerator(api_key=Secret.from_env_var("OPENROUTER_API_KEY"),
    api_base_url="https://openrouter.ai/api/v1",
    model="openai/gpt-4-turbo-preview",
    streaming_callback=print_streaming_chunk)

Then we test whether the chat_generator can be invoked successfully

chat_generator.run(messages=[ChatMessage.from_user("Return this text: 'test'")])

---------- The response should look like this ----------

{'replies': [ChatMessage(content="'test'", role=<ChatRole.ASSISTANT: 'assistant'>, name=None, meta={'model': 'openai/gpt-4-turbo-preview', 'index': 0, 'finish_reason': 'stop', 'usage': {}})]}

Step 1: Establish data store

Here we establish the connection between our application and the two data sources: a document store for unstructured texts, and an application database accessed via API.

Index Documents with a Pipeline

We provide sample texts in documents for the model to perform Retrieval Augmented Generation (RAG). The texts are turned into embeddings and stored in an in-memory document store.

from haystack import Pipeline, Document
from haystack.document_stores.in_memory import InMemoryDocumentStore
from haystack.components.writers import DocumentWriter
from haystack.components.embedders import SentenceTransformersDocumentEmbedder

# Sample documents
documents = [
    Document(content="Coffee shop opens at 9am and closes at 5pm."),
    Document(content="Gym room opens at 6am and closes at 10pm.")
]

# Create the document store
document_store = InMemoryDocumentStore()

# Create a pipeline to turn the texts into embeddings and store them in the document store
indexing_pipeline = Pipeline()
indexing_pipeline.add_component(
    "doc_embedder", SentenceTransformersDocumentEmbedder(model="sentence-transformers/all-MiniLM-L6-v2")
)
indexing_pipeline.add_component("doc_writer", DocumentWriter(document_store=document_store))
indexing_pipeline.connect("doc_embedder.documents", "doc_writer.documents")
indexing_pipeline.run({"doc_embedder": {"documents": documents}})

It should output this, corresponding to the two sample documents we created:

{'doc_writer': {'documents_written': 2}}
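To give a feel for why the texts are stored as vectors, here is a toy sketch of similarity-based retrieval. It is not how SentenceTransformers works internally: the `embed` function below is an assumption that uses plain word counts in place of a learned embedding model, and `retrieve` is a hypothetical helper.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lower-cased word counts. A real embedder returns dense vectors."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


docs = [
    "Coffee shop opens at 9am and closes at 5pm.",
    "Gym room opens at 6am and closes at 10pm.",
]
# "Indexing": store each text next to its vector.
index = [(doc, embed(doc)) for doc in docs]


def retrieve(query: str) -> str:
    """Return the stored text whose vector is closest to the query vector."""
    query_vector = embed(query)
    return max(index, key=lambda pair: cosine(query_vector, pair[1]))[0]


best = retrieve("When does the coffee shop open?")
print(best)
```

A learned embedding model plays the same role as `embed` here, with the advantage that it can match texts by meaning rather than by shared words.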

Spin up API server

An API server made with Flask is created under db_api.py to connect to SQLite. Please spin it up by running python db_api.py in your terminal.

Also notice that some initial data has been added in db_api.py.
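The contents of db_api.py are not reproduced here, so the following is only a rough sketch of the kind of SQLite logic such a server could wrap. The table layout, the seed rows, and the `find_items` and `purchase` helpers are all assumptions for illustration; the real file may differ.

```python
import sqlite3

# Hypothetical schema and seed rows; the real db_api.py may differ.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE item (id INTEGER PRIMARY KEY, name TEXT, category TEXT, stock INTEGER)")
conn.executemany(
    "INSERT INTO item (id, name, category, stock) VALUES (?, ?, ?, ?)",
    [(1, "Coffee", "Food and beverages", 10), (2, "Towel", "Amenities", 5)],
)


def find_items(category: str) -> list[dict]:
    """Return the items in one category as plain dictionaries."""
    rows = conn.execute(
        "SELECT id, name, stock FROM item WHERE category = ?", (category,)
    ).fetchall()
    return [{"id": row[0], "name": row[1], "stock": row[2]} for row in rows]


def purchase(item_id: int, quantity: int) -> dict:
    """Decrement stock only when enough is left, so stock never goes negative."""
    cursor = conn.execute(
        "UPDATE item SET stock = stock - ? WHERE id = ? AND stock >= ?",
        (quantity, item_id, quantity),
    )
    conn.commit()
    return {"success": cursor.rowcount == 1}


print(find_items("Food and beverages"))
print(purchase(1, 3), purchase(1, 100))
```

Parameterized queries (`?` placeholders) matter in this setting because the argument values ultimately come from model output.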

Step 2: Define the functions

Here we prepare the actual functions for the model to invoke AFTER Function Calling (Steps 4–5 as described in The Structure of Function Calling).

RAG function

Namely, the rag_pipeline_func. This is for the model to provide an answer by searching through the texts stored in the Document Store. We first define the RAG retrieval as a Haystack pipeline.

from haystack.components.embedders import SentenceTransformersTextEmbedder
from haystack.components.retrievers.in_memory import InMemoryEmbeddingRetriever
from haystack.components.builders import PromptBuilder
from haystack.components.generators import OpenAIGenerator

template = """
Answer the questions based on the given context.

Context:
{% for document in documents %}
    {{ document.content }}
{% endfor %}
Question: {{ question }}
Answer:
"""

rag_pipe = Pipeline()
rag_pipe.add_component("embedder", SentenceTransformersTextEmbedder(model="sentence-transformers/all-MiniLM-L6-v2"))
rag_pipe.add_component("retriever", InMemoryEmbeddingRetriever(document_store=document_store))
rag_pipe.add_component("prompt_builder", PromptBuilder(template=template))
# Note on llm: We are using OpenAIGenerator, not OpenAIChatGenerator, because the latter only accepts List[ChatMessage] as input and cannot accept prompt_builder's str output
rag_pipe.add_component("llm", OpenAIGenerator(api_key=Secret.from_env_var("OPENROUTER_API_KEY"),
    api_base_url="https://openrouter.ai/api/v1",
    model="openai/gpt-4-turbo-preview"))

rag_pipe.connect("embedder.embedding", "retriever.query_embedding")
rag_pipe.connect("retriever", "prompt_builder.documents")
rag_pipe.connect("prompt_builder", "llm")

Test if the function works

query = "When does the coffee shop open?"
rag_pipe.run({"embedder": {"text": query}, "prompt_builder": {"question": query}})

This should yield the following output. Notice that the reply the model gave comes from the sample documents we provided before:

{'llm': {'replies': ['The coffee shop opens at 9am.'],
  'meta': [{'model': 'openai/gpt-4-turbo-preview',
    'index': 0,
    'finish_reason': 'stop',
    'usage': {'completion_tokens': 9,
     'prompt_tokens': 60,
     'total_tokens': 69,
     'total_cost': 0.00087}}]}}

We can then turn the rag_pipe into a function, which returns only the reply without the other details

def rag_pipeline_func(query: str):
    result = rag_pipe.run({"embedder": {"text": query}, "prompt_builder": {"question": query}})
    return {"reply": result["llm"]["replies"][0]}

API calls

We define the get_items and purchase_item functions for interacting with the database

# Flask's default local URL, change it if necessary
db_base_url = 'http://127.0.0.1:5000'

# Use requests to get the data from the database
import requests
import json

# get_categories is supplied as part of the prompt, it is not used as a tool
def get_categories():
    response = requests.get(f'{db_base_url}/category')
    data = response.json()
    return data

def get_items(ids=None, categories=None):
    params = {
        'id': ids,
        'category': categories,
    }
    response = requests.get(f'{db_base_url}/item', params=params)
    data = response.json()
    return data

def purchase_item(id, quantity):
    headers = {
        'Content-type': 'application/json',
        'Accept': 'application/json'
    }
    data = {
        'id': id,
        'quantity': quantity,
    }
    response = requests.post(f'{db_base_url}/item/purchase', json=data, headers=headers)
    return response.json()
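Both filters of get_items default to None, which works because requests leaves out query parameters whose value is None. The sketch below reproduces that behaviour with the standard library only, so the resulting URLs can be inspected without a running server; `build_item_url` is a hypothetical helper, not part of the demo code.

```python
from urllib.parse import urlencode

db_base_url = "http://127.0.0.1:5000"


def build_item_url(ids=None, categories=None) -> str:
    """Build the URL an item lookup would request, leaving out filters that are None."""
    params = {"id": ids, "category": categories}
    present = {key: value for key, value in params.items() if value is not None}
    query = urlencode(present)
    return f"{db_base_url}/item" + (f"?{query}" if query else "")


print(build_item_url())
print(build_item_url(categories="Food and beverages"))
print(build_item_url(ids="1,2"))
```

This is why the model can supply ids, categories, both, or neither, and the same function still produces a valid request.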

Define the tool list

Now that we've defined the functions, we need to let the model recognize them and instruct it on how they are used, by providing descriptions for them.

Since we are using OpenAI here, the tools are formatted as below, following the format required by OpenAI.

tools = [
    {
        "type": "function",
        "function": {
            "name": "get_items",
            "description": "Get a list of items from the database",
            "parameters": {
                "type": "object",
                "properties": {
                    "ids": {
                        "type": "string",
                        "description": "Comma separated list of item ids to fetch",
                    },
                    "categories": {
                        "type": "string",
                        "description": "Comma separated list of item categories to fetch",
                    },
                },
                "required": [],
            },
        }
    },
    {
        "type": "function",
        "function": {
            "name": "purchase_item",
            "description": "Purchase a particular item",
            "parameters": {
                "type": "object",
                "properties": {
                    "id": {
                        "type": "string",
                        "description": "The given product ID, product name is not accepted here. Please obtain the product ID from the database first.",
                    },
                    "quantity": {
                        "type": "integer",
                        "description": "Number of items to purchase",
                    },
                },
                "required": [],
            },
        }
    },
    {
        "type": "function",
        "function": {
            "name": "rag_pipeline_func",
            "description": "Get information from hotel brochure",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "The query to use in the search. Infer this from the user's message. It should be a question or a statement",
                    }
                },
                "required": ["query"],
            },
        },
    }
]
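The declarations describe what the model should send, but nothing forces the model to comply. As a minimal sketch, the arguments could be checked against a declaration before any function is called. `check_arguments` is a hypothetical helper, and the trimmed declaration below marks both fields as required, which is an assumption that is stricter than the list above.

```python
import json

# A trimmed declaration in the same format as the tool list above.
purchase_tool = {
    "type": "function",
    "function": {
        "name": "purchase_item",
        "parameters": {
            "type": "object",
            "properties": {
                "id": {"type": "string"},
                "quantity": {"type": "integer"},
            },
            "required": ["id", "quantity"],  # assumption: stricter than the list above
        },
    },
}

# Only the JSON Schema types used in this sketch are mapped.
JSON_TYPES = {"string": str, "integer": int}


def check_arguments(tool: dict, raw_arguments: str) -> list[str]:
    """Return a list of problems; an empty list means the arguments look usable."""
    schema = tool["function"]["parameters"]
    args = json.loads(raw_arguments)
    problems = [f"missing: {name}" for name in schema["required"] if name not in args]
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unexpected: {name}")
        elif not isinstance(value, JSON_TYPES[spec["type"]]):
            problems.append(f"wrong type: {name}")
    return problems


print(check_arguments(purchase_tool, '{"id": "3", "quantity": 2}'))
print(check_arguments(purchase_tool, '{"quantity": "two"}'))
```

For production use, a full JSON Schema validator would be a more complete choice than this hand-rolled check.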

Step 3: Putting it all together

We now have the necessary inputs to test Function Calling! Here we do a few things:

- Provide the initial prompt to the model, to give it some context

- Provide a sample user-generated message

- Most importantly, pass the tool list to the chat generator in tools

# 1. Initial prompt
context = f"""You are an assistant to tourists visiting a hotel.
You have access to a database of items (which includes {get_categories()}) that tourists can buy, you also have access to the hotel's brochure.
If the tourist's question cannot be answered from the database, you can refer to the brochure.
If the tourist's question cannot be answered from the brochure, you can ask the tourist to ask the hotel staff.
"""

messages = [
    ChatMessage.from_system(context),
    # 2. Sample message from user
    ChatMessage.from_user("Can I buy a coffee?"),
]

# 3. Pass the tools list and invoke the chat generator
response = chat_generator.run(messages=messages, generation_kwargs={"tools": tools})
response

---------- Response ----------

{'replies': [ChatMessage(content='[{"index": 0, "id": "call_AkTWoiJzx5uJSgKW0WAI1yBB", "function": {"arguments": "{\"categories\":\"Food and beverages\"}", "name": "get_items"}, "type": "function"}]', role=<ChatRole.ASSISTANT: 'assistant'>, name=None, meta={'model': 'openai/gpt-4-turbo-preview', 'index': 0, 'finish_reason': 'tool_calls', 'usage': {}})]}

Now let's inspect the response. Notice how Function Calling returns both the function chosen by the model and the arguments for invoking the chosen function.

function_call = json.loads(response["replies"][0].content)[0]
function_name = function_call["function"]["name"]
function_args = json.loads(function_call["function"]["arguments"])
print("Function Name:", function_name)
print("Function Arguments:", function_args)

---------- Response ----------

Function Name: get_items

Function Arguments: {'categories': 'Food and beverages'}

When presented with another question, the model will use another tool that is more relevant

# Another question
messages.append(ChatMessage.from_user("Where's the coffee shop?"))

# Invoke the chat generator, passing the tools list
response = chat_generator.run(messages=messages, generation_kwargs={"tools": tools})
function_call = json.loads(response["replies"][0].content)[0]
function_name = function_call["function"]["name"]
function_args = json.loads(function_call["function"]["arguments"])
print("Function Name:", function_name)
print("Function Arguments:", function_args)

---------- Response ----------

Function Name: rag_pipeline_func

Function Arguments: {'query': "Where's the coffee shop?"}

Again, notice that no actual function is invoked here; this is what we will do next!

Calling the function

We can then feed the arguments into the chosen function

## Find the corresponding function and call it with the given arguments
available_functions = {"get_items": get_items, "purchase_item": purchase_item, "rag_pipeline_func": rag_pipeline_func}
function_to_call = available_functions[function_name]
function_response = function_to_call(**function_args)
print("Function Response:", function_response)

---------- Response ----------

Function Response: {'reply': 'The provided context does not specify a physical location for the coffee shop, only its operating hours. Therefore, I cannot determine where the coffee shop is located based on the given information.'}
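A plain dictionary lookup raises an exception if the model suggests a function that does not exist or sends arguments the function does not accept. One possible way to soften this, shown as a sketch only, is to return the error as data so it can be fed back to the model. `safe_dispatch` and `echo_query` are hypothetical names introduced for illustration.

```python
import json


def safe_dispatch(available_functions: dict, function_name: str, raw_arguments: str) -> dict:
    """Call the requested tool, returning an error dict instead of raising."""
    function_to_call = available_functions.get(function_name)
    if function_to_call is None:
        return {"error": f"unknown function: {function_name}"}
    try:
        return function_to_call(**json.loads(raw_arguments))
    except (json.JSONDecodeError, TypeError) as exc:
        # JSONDecodeError: malformed arguments; TypeError: wrong or missing parameters.
        return {"error": str(exc)}


def echo_query(query: str) -> dict:
    """Hypothetical stand-in for a real tool such as rag_pipeline_func."""
    return {"reply": f"You asked: {query}"}


tools_by_name = {"echo_query": echo_query}
ok = safe_dispatch(tools_by_name, "echo_query", '{"query": "Where is the gym?"}')
unknown = safe_dispatch(tools_by_name, "delete_everything", "{}")
bad_args = safe_dispatch(tools_by_name, "echo_query", '{"question": "?"}')
print(ok, unknown, bad_args, sep="\n")
```

One trade-off of this design is that a TypeError raised inside the tool itself is also reported as an argument problem, so a stricter version might validate the arguments separately before calling.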

The response from rag_pipeline_func can then be passed as context to the chat by appending it to the messages, for the model to provide the final answer

messages.append(ChatMessage.from_function(content=json.dumps(function_response), name=function_name))
response = chat_generator.run(messages=messages)
response_msg = response["replies"][0]

print(response_msg.content)

---------- Response ----------

For the location of the coffee shop within the hotel, I recommend asking the hotel staff directly. They will be able to guide you to it accurately.

We have now completed the chat cycle!

Step 4: Turn into an interactive chat

The code above shows how Function Calling can be done, but we want to go a step further by turning it into an interactive chat.

Here I showcase two methods to do it, from the more primitive input() that prints the dialogue into the notebook itself, to rendering it through Streamlit to give it a ChatGPT-like UI.

input() loop

The code is copied from Haystack's tutorial, which allows us to quickly test the model. Note: This application is created to demonstrate the idea of Function Calling, and is NOT meant to be perfectly robust, e.g. it does not support ordering multiple items at the same time, it is not free of hallucination, etc.

import json
from haystack.dataclasses import ChatMessage, ChatRole

response = None
messages = [
    ChatMessage.from_system(context)
]

while True:
    # if OpenAI response is a tool call
    if response and response["replies"][0].meta["finish_reason"] == "tool_calls":
        function_calls = json.loads(response["replies"][0].content)

        for function_call in function_calls:
            ## Parse function calling information
            function_name = function_call["function"]["name"]
            function_args = json.loads(function_call["function"]["arguments"])

            ## Find the corresponding function and call it with the given arguments
            function_to_call = available_functions[function_name]
            function_response = function_to_call(**function_args)

            ## Append function response to the messages list using `ChatMessage.from_function`
            messages.append(ChatMessage.from_function(content=json.dumps(function_response), name=function_name))

    # Regular conversation
    else:
        # Append assistant messages to the messages list
        if not messages[-1].is_from(ChatRole.SYSTEM):
            messages.append(response["replies"][0])

        user_input = input("ENTER YOUR MESSAGE 👇 INFO: Type 'exit' or 'quit' to stop\n")
        if user_input.lower() == "exit" or user_input.lower() == "quit":
            break
        else:
            messages.append(ChatMessage.from_user(user_input))

    response = chat_generator.run(messages=messages, generation_kwargs={"tools": tools})
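The loop above keeps calling tools for as long as the model asks for them. As a sketch of one possible safeguard, the variant below caps the number of tool rounds per user turn and can be exercised without an API key. It uses plain dictionaries instead of Haystack's ChatMessage, and `scripted_model`, `list_items`, and `MAX_TOOL_ROUNDS` are assumptions made for illustration.

```python
import json

MAX_TOOL_ROUNDS = 3  # assumption: an arbitrary safety limit, not taken from the tutorial


def run_turn(model, available_functions: dict, messages: list[dict]) -> str:
    """Let the model call tools until it answers in text or the round limit is hit."""
    for _ in range(MAX_TOOL_ROUNDS):
        reply = model(messages)
        if reply["finish_reason"] != "tool_calls":
            messages.append({"role": "assistant", "content": reply["content"]})
            return reply["content"]
        for call in json.loads(reply["content"]):
            name = call["function"]["name"]
            args = json.loads(call["function"]["arguments"])
            result = available_functions[name](**args)
            messages.append({"role": "function", "name": name, "content": json.dumps(result)})
    return "Sorry, I could not complete that request."


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for the chat generator: asks for a tool once, then answers."""
    if messages[-1]["role"] == "function":
        return {"finish_reason": "stop", "content": "We have: " + messages[-1]["content"]}
    call = {"function": {"name": "list_items", "arguments": json.dumps({"category": "drinks"})}}
    return {"finish_reason": "tool_calls", "content": json.dumps([call])}


def list_items(category: str) -> list[str]:
    """Hypothetical tool backed by a fixed list."""
    return ["coffee", "tea"] if category == "drinks" else []


history = [{"role": "user", "content": "What drinks do you sell?"}]
final_answer = run_turn(scripted_model, {"list_items": list_items}, history)
print(final_answer)
```

Swapping `scripted_model` for a thin wrapper around the real chat generator would keep the same control flow while adding the round limit.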

While it works, we might want to have something that looks nicer.

Streamlit interface

Streamlit turns data scripts into shareable web apps, which provides a neat UI for our application. The code shown above is adapted into a Streamlit application under the streamlit folder of my repo.

You can run it by:

- If you have not done so already, spin up the API server with python db_api.py

- Set the OPENROUTER_API_KEY as an environment variable, e.g. export OPENROUTER_API_KEY='@REPLACE WITH YOUR API KEY', assuming you are on Linux / executing with git bash

- Navigate to the streamlit folder in the terminal with cd streamlit

- Run Streamlit with streamlit run app.py. A new tab running the application should open automatically in your browser

That's basically it! I hope you enjoy this article.

*Unless otherwise noted, all images are by the author
